April 18, 2019

Online shopping brings many conveniences, but one challenge for shoppers is choosing the right size of clothing: without the opportunity to try items on, it’s difficult to know which size to purchase.

Sarina Sit and Ramya Prasad (both Year 4 CompE) set out to address this challenge with their capstone project. Theirs was one of 85 projects designed by fourth-year students from The Edward S. Rogers Sr. Department of Electrical & Computer Engineering (ECE) and displayed at the Capstone Design Fair from April 2 to April 4, 2019. Selected teams were then invited to present their projects at ECE’s annual Design Showcase on Friday, April 5, 2019.

“Gauging clothing sizes is a big challenge for online shoppers: even though two pieces of clothing from different stores might have the same numerical size, there’s no guarantee they will fit the same,” said Prasad. “We used convolutional neural networks to detect, classify and segment people in a photo, which allowed us to predict and join the locations of body parts and provide measurements accurate to within about five centimetres.”

The team developed an iOS mobile application that estimates the measurements of various body parts from a single photo taken with an iPhone. While online shopping is an obvious application for this type of technology, the group also cites measuring furniture and tracking pregnancies as possible future applications of their work.
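The article does not describe how the app converts detected body-part locations into centimetres. One simple approach, shown here purely as a hypothetical sketch, is to scale pixel distances between 2D keypoints by a known reference length such as the person’s height. The keypoint names, the `estimate_length_cm` helper and the reference-length assumption are all illustrative and not taken from the team’s work; the sketch also assumes the person stands roughly parallel to the camera, and it only handles straight-line lengths, not circumferences.

```python
import math

# Hypothetical 2D keypoints (x, y) in pixels, as a pose-estimation
# network might output them. Names are illustrative only.
Keypoints = dict[str, tuple[float, float]]


def estimate_length_cm(
    keypoints: Keypoints,
    path: list[str],
    reference_pair: tuple[str, str],
    reference_cm: float,
) -> float:
    """Estimate a body length by joining keypoints along `path`.

    Assumes the pixel distance between `reference_pair` corresponds to a
    known real-world length `reference_cm` (e.g. the person's height), and
    that all points lie roughly in a plane parallel to the camera.
    """
    ref_px = math.dist(keypoints[reference_pair[0]], keypoints[reference_pair[1]])
    cm_per_px = reference_cm / ref_px
    # Sum the straight-line segments between consecutive keypoints.
    path_px = sum(
        math.dist(keypoints[a], keypoints[b]) for a, b in zip(path, path[1:])
    )
    return path_px * cm_per_px


points: Keypoints = {
    "head_top": (100.0, 50.0),
    "heel": (100.0, 450.0),
    "shoulder": (150.0, 150.0),
    "elbow": (150.0, 250.0),
    "wrist": (150.0, 330.0),
}
arm_cm = estimate_length_cm(
    points, ["shoulder", "elbow", "wrist"], ("head_top", "heel"), 170.0
)
print(f"Estimated arm length: {arm_cm:.1f} cm")
```

Joining keypoints along a path (shoulder to elbow to wrist) rather than measuring shoulder to wrist directly keeps a bent arm from being underestimated.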

Augmented reality (AR) overlays computer-generated sensory data on a user’s perception of the real world, allowing the user to interact with virtual content in their physical surroundings. However, traditional AR has not allowed multiple users to interact seamlessly within the same virtual world. Seunghyun Cho, Edwin Lee (both Year 4 CompE) and Andrew Maksymowsky (Year 4 ElecE) set out to design a framework to support the development of multi-user AR applications.

“Humans are social creatures, so it makes sense to create AR applications where they can interact with each other in one virtual world,” said Cho. “We saw an opportunity to explore an emerging field, and though gaming is one application where this could be useful, other interesting use cases could be in classrooms, where an instructor could work with a model of something like a human heart and students could follow along and interact from their own devices in real time.”

The team’s approach processed and rendered the AR visuals locally on each user’s mobile device, while a server synchronized the shared virtual world. To overcome the challenges of synchronizing multiple views of the world and building a common coordinate system, they used Cloud Anchors to provide a shared frame of reference. From there, they used a physics and rendering engine to build the three-dimensional virtual world, describing each object by its position relative to the Cloud Anchor.
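The article does not detail the team’s implementation, but the core idea of describing objects relative to a shared anchor can be sketched with basic coordinate transforms. The minimal sketch below assumes that each device already knows the anchor’s pose in its own local frame as a rotation matrix and a translation (in practice an AR framework supplies this once the anchor is resolved). The `AnchorPose` class and helper functions are hypothetical and are not part of the ARCore Cloud Anchors API.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def transpose(m: Mat3) -> Mat3:
    return tuple(tuple(m[j][i] for j in range(3)) for i in range(3))


def yaw_rotation(theta: float) -> Mat3:
    """Rotation by `theta` radians about the vertical (y) axis."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, 0.0, s), (0.0, 1.0, 0.0), (-s, 0.0, c))


@dataclass
class AnchorPose:
    """Pose of the shared anchor expressed in one device's local frame."""

    rotation: Mat3     # anchor axes in device coordinates
    translation: Vec3  # anchor origin in device coordinates

    def to_anchor(self, p_local: Vec3) -> Vec3:
        """Device-local point -> anchor-relative point: R^T (p - t)."""
        d = tuple(p_local[i] - self.translation[i] for i in range(3))
        return mat_vec(transpose(self.rotation), d)

    def to_local(self, p_anchor: Vec3) -> Vec3:
        """Anchor-relative point -> device-local point: R p + t."""
        r = mat_vec(self.rotation, p_anchor)
        return tuple(r[i] + self.translation[i] for i in range(3))


IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))

# Device A places an object and shares its anchor-relative position.
device_a = AnchorPose(IDENTITY, (1.0, 0.0, 2.0))
shared = device_a.to_anchor((2.0, 0.0, 2.0))

# Device B sees the same anchor from a different position and heading.
device_b = AnchorPose(yaw_rotation(math.pi / 2), (-3.0, 0.0, 0.5))
in_b = device_b.to_local(shared)
print(shared, in_b)
```

Because only anchor-relative coordinates pass through the server, the devices never need to agree on anything except the anchor itself; each one maps shared positions into its own frame before rendering.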

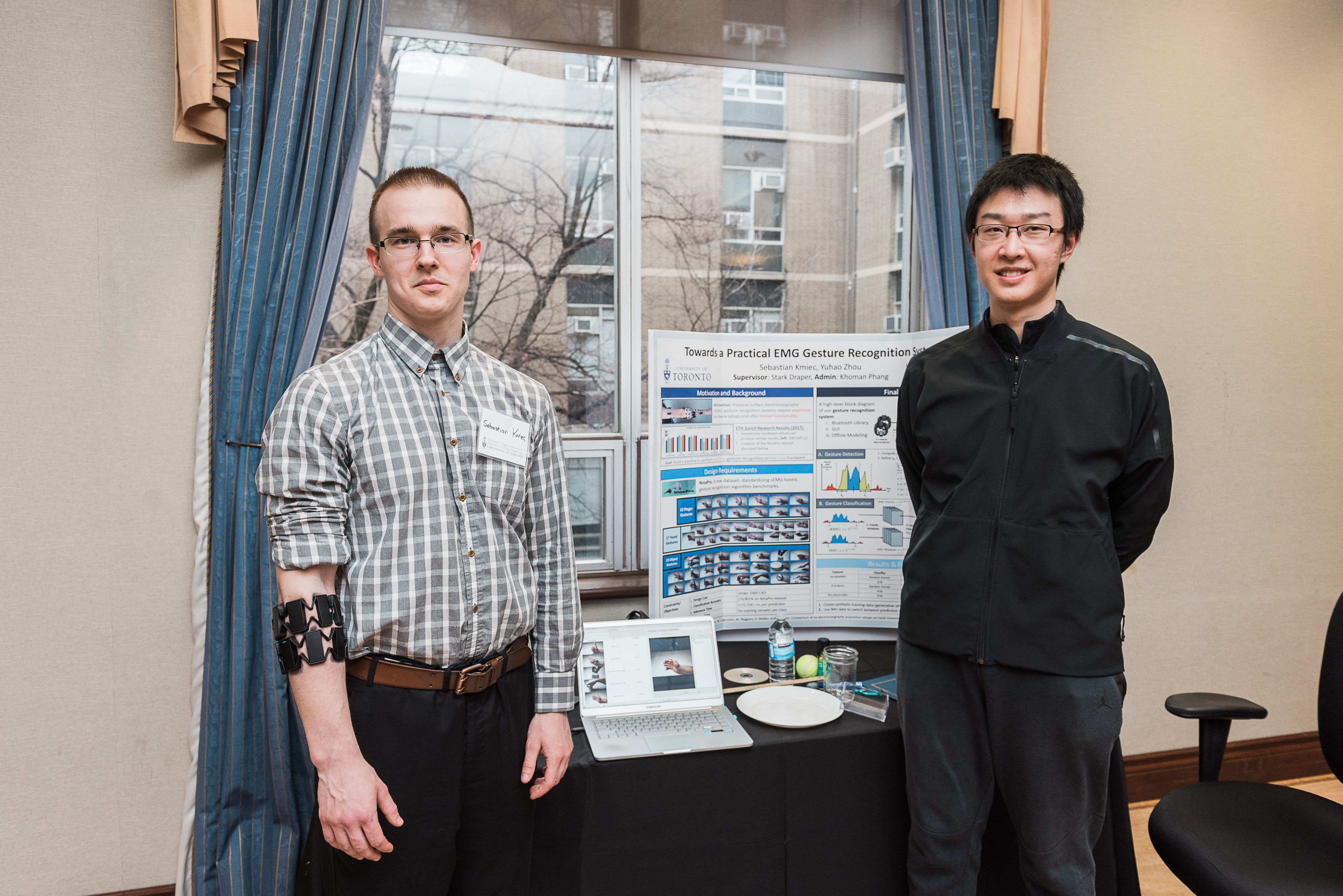

Surface electromyography (sEMG) is a technique for detecting, recording and interpreting the electrical activity of muscles using electrodes placed on the skin over the muscles of interest. One application of sEMG is in prosthetics, where the activity of the muscles directly beneath the sensor can be used to control the movement of a prosthesis. Existing systems, however, require expensive hardware and offer users limited functionality. Sebastian Kmiec and Yuhao Zhou (both Year 4 ElecE) set out to create an sEMG gesture recognition system with their capstone project.

“Our goal was to create a low-cost system that would aid the control of forearm prostheses,” said Kmiec. “We wanted to build something that could perform real-time gesture recognition with very high classification accuracy, using a limited amount of data: about six samples per class.”

To do this, Kmiec and Zhou designed feature extraction methods and artificial neural network systems to classify EMG signals in real time on low-cost hardware. Their final design detected gestures within 100 ms and achieved classification accuracies of 89 percent on the NinaPro dataset and 96 percent on their own dataset, well above standard benchmarks for sEMG-based gesture recognition algorithms.
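The article does not say which features the team extracted. As a hedged illustration, the sketch below computes a classic set of time-domain features widely used in the sEMG literature (mean absolute value, root mean square, waveform length, zero crossings and slope sign changes) over sliding windows of a single-channel signal. The function names, the noise threshold and the window parameters are assumptions; in a full system the resulting feature vectors would be passed to a classifier such as a neural network.

```python
import math
from typing import List, Sequence


def mean_absolute_value(x: Sequence[float]) -> float:
    return sum(abs(v) for v in x) / len(x)


def root_mean_square(x: Sequence[float]) -> float:
    return math.sqrt(sum(v * v for v in x) / len(x))


def waveform_length(x: Sequence[float]) -> float:
    """Cumulative absolute change between consecutive samples."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))


def zero_crossings(x: Sequence[float], threshold: float = 0.0) -> int:
    """Sign changes whose amplitude step is at least `threshold` (noise guard)."""
    return sum(1 for a, b in zip(x, x[1:]) if a * b < 0 and abs(a - b) >= threshold)


def slope_sign_changes(x: Sequence[float], threshold: float = 0.0) -> int:
    """Local peaks and troughs, i.e. points where the slope changes sign."""
    return sum(
        1
        for prev, cur, nxt in zip(x, x[1:], x[2:])
        if (cur - prev) * (cur - nxt) > threshold
    )


def extract_features(window: Sequence[float], threshold: float = 0.0) -> List[float]:
    """One feature vector per window: [MAV, RMS, WL, ZC, SSC]."""
    return [
        mean_absolute_value(window),
        root_mean_square(window),
        waveform_length(window),
        float(zero_crossings(window, threshold)),
        float(slope_sign_changes(window, threshold)),
    ]


def sliding_windows(signal: Sequence[float], size: int, step: int) -> List[Sequence[float]]:
    """Overlapping windows of `size` samples, advancing by `step` samples."""
    return [signal[i : i + size] for i in range(0, len(signal) - size + 1, step)]


# Toy single-channel signal; real sEMG would be sampled at a much higher rate.
raw = [0.1, -0.3, 0.5, -0.2, 0.4, -0.6, 0.2, -0.1]
features = [extract_features(w) for w in sliding_windows(raw, size=4, step=2)]
print(features)
```

The window size in samples is the sampling rate multiplied by the window duration. The team’s sampling rate is not stated, so the tiny window above is only illustrative; a real system would choose a window short enough to keep overall latency within the 100 ms figure reported for the final design.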

“This year’s projects draw from so many areas of ECE; they highlight innovations that our undergraduate students are making in assistive technology, machine intelligence and applied research,” said Professor Khoman Phang, the course coordinator. “It’s really exciting to see all the work that the student teams have put in over the past year come together in the presentations we’ve seen here today.”


More information:

Jessica MacInnis

Senior Communications Officer

The Edward S. Rogers Sr. Department of Electrical & Computer Engineering

416-978-7997; jessica.macinnis@utoronto.ca