JUNE 7, 2023 • By Matthew Tierney

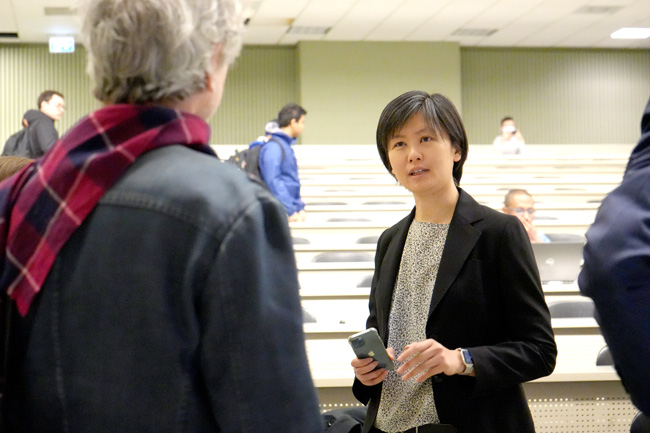

On May 17, 2023, a day-long symposium led by ECE professor Joyce Poon brought together thought leaders from academia and industry to share ideas on how to design the next generation of hardware engines in a world where AI is driving demand to new levels.

Poon, who is also a Director at the Max Planck Institute of Microstructure Physics in Germany, says that the industry needs nothing less than a revolution, given the computational bottlenecks of large machine learning (ML) models.

“The demand for computing performance is doubling every two months, but hardware performance is doubling only every two or three years,” she says. “The sustainability of the whole machine intelligence age is in question.”

The Compute Acceleration Symposium was held concurrently with the SiEPIC-CMC Microsystems Active Silicon Photonics Fabrication Workshop. Speakers included Christian Weedbrook of Xanadu, Dirk Englund of MIT and QuEra, and U of T professors Andreas Moshovos (ECE), Tony Chan Carusone (ECE, Alphawave) and Francesco Bova (Rotman), among many others.

Everything from photonics-integrated circuits to quantum computing was on the table as a potential path forward, with consideration of how these systems and networks might fit together.

The final session of the day was a roundtable that discussed the business needs and opportunities of a hardware breakthrough.

The processing units in today’s computers are built with a transistor technology called complementary metal–oxide–semiconductor (CMOS). For half a century, this technology followed Moore’s Law, which predicts that the number of transistors that can be printed onto an integrated circuit will double every two years, enabling ever more powerful computation.

But starting about ten years ago, the speed of advances began to slow, to the point where many experts now believe Moore’s Law to be at an end. At the same time, the enormous amounts of data required to train AI models are putting a strain on the communication and memory bandwidth of CMOS transistors, not to mention their energy consumption.

“The carbon footprint needed to train a large language model like ChatGPT3 is equivalent to about 150 roundtrips between Toronto and Beijing, and it costs millions of dollars,” says Poon. “That means such models are reserved for the very big companies at this point.”

Both Google and Microsoft are in the process of rolling out AI-powered search engines.

One potential hardware route to greater computing density and energy efficiency lies in the medium of transmission: not electrons, but photons. Optical circuits free hardware designers from the limits of Moore’s Law, and because they can be fabricated on silicon wafers, they can use existing microelectronic foundries, a clear advantage for scalability. But the integration of the fibres and lasers on the chip, known as the ‘packaging,’ is less sophisticated than it is for CMOS and very costly.

Another solution is quantum computing, which holds promise for certain kinds of large computational problems — but the technology requires specialized equipment and the infrastructure for a broader deployment is limited.

“There doesn’t seem to be a clear path to a new general-purpose machine,” says Poon. “It’s time to get serious about looking for a truly novel way of computing. For this you need investments in infrastructure, state-of-the-art labs, new materials, new chips, all of which are costly, ambitious endeavours.”

Poon notes that the Biden administration’s CHIPS and Science Act of 2022 has galvanized scientists and engineers south of the border to develop a homegrown, sustainable semiconductor economy.

“In the United States, and in Europe, they are putting in not tens but hundreds of billions of dollars to build up the microchip ecosystem,” says Poon. “It’s the conversation everyone is having.

“Canada has a lot of good ingredients to be a leader in this area. There’s been many discussions at the federal level, companies are strategizing and submitting position papers to government, lobby groups and national organizations are getting involved. I believe the time is right for Canada to launch a major R&D centre.”

Poon envisions a privately and publicly funded facility, much like the Vector Institute for Artificial Intelligence, that brings together researchers from many universities alongside industry to find a role for Canada in this global push.

“Research benefits immensely from cross-pollination, and symposiums like the one Professor Poon organized can prove invaluable to participants,” says ECE Chair Professor Deepa Kundur. “Time and again these things provide momentum for larger research initiatives or projects.”

The day after the symposium, Poon and ECE professor Stark Draper flew to the inaugural North America Semiconductor Conference in Washington, D.C., as part of the Canadian delegation to discuss semiconductor supply chain issues and strategy.

“The lesson from the pandemic is, you’ve got to have some capabilities yourself,” says Poon. “If Canada has nothing to offer to the world, then we’ll have no chips. It would be disastrous for the economy and national security.”