March 29, 2021

By Matthew Tierney

Researchers from the Edward S. Rogers Sr. Department of Electrical & Computer Engineering (ECE) and LG AI Research have developed a novel explainable artificial intelligence (XAI) algorithm that can help identify and eliminate defects in display screens.

The algorithm, which outperformed comparable approaches on industry benchmarks, could potentially be applied in other fields, such as the interpretation of data from medical scans. It is the first achievement to emerge from the AI research collaboration between LG and the University of Toronto, an investment that LG recently committed to expanding.

The team includes Professor Kostas Plataniotis (ECE), recent graduate Mahesh Sudhakar (MEng 2T0) and ECE Master’s candidate Sam Sattarzadeh, as well as researchers led by Dr. Jongseong Jang at LG AI Research Canada, part of the company’s global research-and-development arm.

“Explainability and interpretability are about meeting the quality standards we set for ourselves as engineers and demanded by the end user,” says Plataniotis. “With XAI, there’s no ‘one size fits all.’ You have to ask whom you’re developing it for. Is it for another machine learning developer? Or is it for a doctor or lawyer?”

XAI is an emerging field, one that addresses issues with the ‘black box’ approach of machine learning (ML) strategies.

In a black box ML model, a computer might be given a set of training data in the form of millions of labelled images. By analyzing this data, the ML algorithm learns to associate certain features of the input (images) with certain outputs (labels). Eventually, it can correctly attach labels to images it has never seen before.

The machine decides for itself which aspects of the image to pay attention to and which to ignore. But it keeps that information secret — not even its designers know exactly how it arrives at a result.

This approach doesn’t translate well when ML is deployed in non-traditional areas such as health care, law and insurance. These human-centred fields demand a high level of trust in the ML model’s results. Such trust is hard to build when nobody knows what happens inside the black box.

“For example, an ML model might determine a patient has a 90% chance of having a tumour,” says Sudhakar. “The consequences of acting on inaccurate or biased information are literally life or death. To fully understand and interpret the model’s prediction, the doctor needs to know how the algorithm arrived at it.”

In contrast to traditional ML, XAI is a ‘glass box’ approach that makes the decision-making transparent. XAI algorithms are run alongside traditional algorithms to audit both the validity and the level of their learning performance. This approach also provides opportunities to carry out debugging and find training efficiencies.

Sudhakar says that, broadly speaking, there are two methodologies to develop an XAI algorithm, each with advantages and drawbacks.

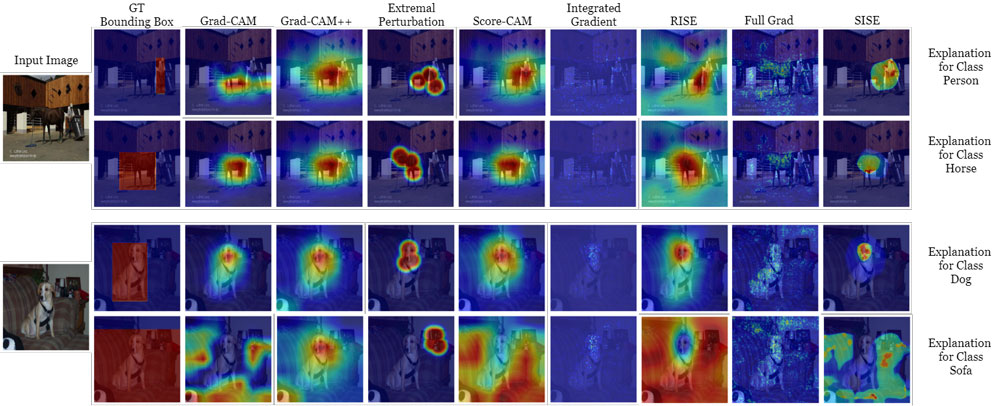

The first, known as backpropagation, relies on the underlying AI architecture to quickly calculate how the network’s prediction corresponds to its input. The second, known as perturbation, sacrifices some speed for accuracy, and involves changing data inputs and tracking the corresponding outputs to determine how much each part of the input contributes to the prediction.
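To make the contrast concrete, here is a minimal Python sketch of the two families on a toy example. It is not SISE and not the team's code: `toy_model`, `gradient_saliency`, `occlusion_saliency`, the 8×8 image and the 2×2 patch size are all hypothetical stand-ins chosen for illustration. The backpropagation-style map uses the model's gradient with respect to each pixel, while the perturbation-style map blanks out one patch at a time and records how far the score drops.

```python
import numpy as np

def toy_model(image, weights):
    """Hypothetical stand-in classifier: sigmoid of a weighted pixel sum."""
    return 1.0 / (1.0 + np.exp(-np.sum(weights * image)))

def gradient_saliency(image, weights):
    """Backpropagation-style map: gradient of the score w.r.t. each pixel.

    For this toy model the gradient has a closed form, s * (1 - s) * weights,
    so a single model evaluation is enough.
    """
    s = toy_model(image, weights)
    return np.abs(s * (1.0 - s) * weights)

def occlusion_saliency(image, weights, patch=2):
    """Perturbation-style map: score drop when each patch is blanked out.

    Needs one model evaluation per patch, so it is slower than the
    gradient map but does not rely on the model's internals.
    """
    base = toy_model(image, weights)
    heat = np.zeros_like(image, dtype=float)
    for r in range(0, image.shape[0], patch):
        for c in range(0, image.shape[1], patch):
            perturbed = image.copy()
            perturbed[r:r + patch, c:c + patch] = 0.0  # blank this patch
            heat[r:r + patch, c:c + patch] = base - toy_model(perturbed, weights)
    return heat

# Assumed toy data: a uniform image and a model that only "looks at"
# the 2x2 region in rows 2-3, columns 2-3.
image = np.ones((8, 8))
weights = np.zeros((8, 8))
weights[2:4, 2:4] = 1.0

grad_map = gradient_saliency(image, weights)
occ_map = occlusion_saliency(image, weights)
```

In this sketch both maps highlight the same 2×2 region, but the occlusion map takes 16 extra model evaluations (one per patch) where the gradient map takes one. On a real network with high-resolution images that gap is what makes run time a concern for perturbation methods.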

“Our partners at LG desired a new technology that combined the advantages of both,” says Sudhakar. “They had an existing ML model that identified defective parts in LG products with displays, and our task was to improve the accuracy of the high-resolution heat maps of possible defects while maintaining an acceptable run time.”

The team’s resulting XAI algorithm, Semantic Input Sampling for Explanation (SISE), is described in a recent paper for the Thirty-Fifth AAAI Conference on Artificial Intelligence.

“We see potential in SISE for widespread application,” says Plataniotis. “The problem and intent of the particular scenario will always require adjustments to the algorithm — but these heat maps or ‘explanation maps’ could be more easily interpreted by, for example, a medical professional.”

“LG’s goal in partnering with University of Toronto is to become a world leader in AI innovation,” says Jang. “This first achievement in XAI speaks to our company’s ongoing efforts to make contributions in multiple areas, such as functionality of LG products, innovation of manufacturing, management of supply chain, efficiency of material discovery and others, using AI to enhance customer satisfaction.”

ECE Chair Professor Deepa Kundur says successes like this are a good example of the value of collaborating with industry partners: “When both sets of researchers come to the table with their respective points of view, it can often accelerate the problem-solving. It is invaluable for graduate students to be exposed to this process.”

The team says that while it was a challenge to meet the aggressive accuracy and run-time targets within the fixed year-long project — all while juggling Toronto/Seoul time zones and working under COVID-19 constraints — the opportunity to generate a practical solution for a world-renowned manufacturer will not soon be forgotten.

“It was good for us to understand how exactly industry works,” says Sudhakar. “LG’s goals were ambitious, but we had very encouraging support from them, with feedback on ideas or analogies to explore. It was very exciting.”

More information:

Jessica MacInnis

External Relations Manager

The Edward S. Rogers Sr. Department of Electrical & Computer Engineering

416-978-7997; jessica.macinnis@utoronto.ca